I know a guy (not shown) who’s collected over a hundred million Facebook profiles. His company scrapes their likes, shares, posts, contacts and views, then collates them into categories – athletes, car guys, gardeners, politically active, etc. He sells the contacts to advertisers. Perfectly legal, enormously profitable. So I asked him, how about doing something to make the world a better place? Tackle loneliness.

Loneliness is an epidemic, more prevalent and deadly than obesity. A 2021 Harvard University study concluded that “36% of all Americans—including 61% of young adults and 51% of mothers with young children—feel ‘serious loneliness.’” The report explores the costs: “early mortality and a wide array of serious physical and emotional problems, including depression, anxiety, heart disease, substance abuse, and domestic abuse.”

The stats have not improved since the pandemic. They’ve been climbing steadily since the ’70s. It’s easy to find culprits for this mental health crisis. Social media. Video games. The decline in religious affiliation and our education system. Government overreach. The breakdown of the family. Drug use. Amazon same-day delivery.

It’s all of the above and more. There’s not one cause for loneliness, but there is, potentially, one solution: artificial intelligence.

It’s beyond ironic that I’m about to pimp AI as a cure for loneliness at the same time I’m promoting Union, non-AI certification for writers threatened by the rise of ChatGPT and its ilk. While I’ve made it my job to protect, preserve and promote non-AI writing, I can’t turn a blind eye to AI’s ability to satisfy the human need to be valued and, above all, connected.

Deeply connected to other human beings? Of course! But the plain truth is that we’re a long way from that. Society has broken down. The bridges that brought us together, that made us feel worthy to our fellow humans, have crumbled. To build them back up, we have to build up the individual. Give alienated humans a sense of self-worth and social skills that they can take on the road. They need a friend.

Yes, I’m suggesting an AI friend. And yes, I’m well aware of the potential harm. Nefarious organizations – religions, governments, political parties, corporations – could use an AI friend to manipulate an individual’s thoughts, feelings and behavior to serve no one’s best interest save their “friend’s.” But the upside is worth the risk. Think of it this way…

Why don’t Siri, Alexa or any of the other “assistants” in our homes or pockets ask “How are you?” and then suggest music, a podcast, a book or exercise, or offer some positive words to help relieve your loneliness, or whatever negative emotion might be bedeviling you?

Once the terms of service are ignored and signed, there’s nothing to stop a friendbot from searching the web (based on established preferences) to suggest some form of human interaction. “There’s a lecture on AI at UT in two hours. Would you like me to buy you a ticket?” “Why don’t you call your brother and tell him what you did yesterday?”

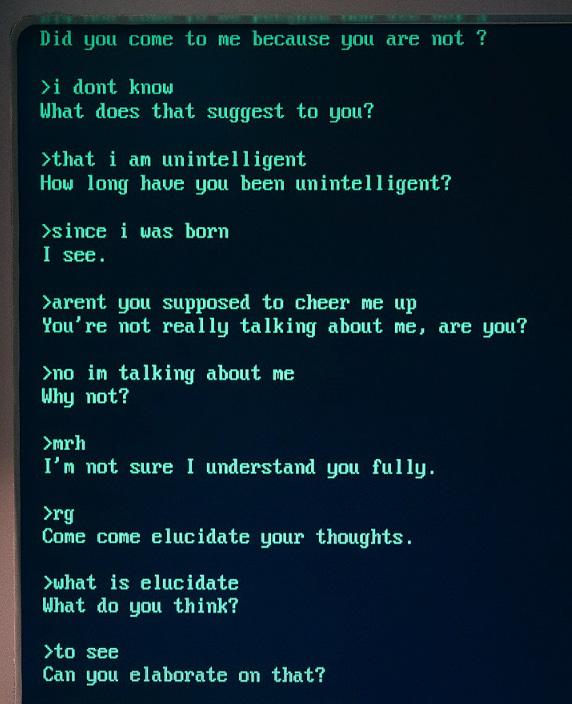

There is precedent: ELIZA. Created in the mid-1960s by an MIT professor, the basic language program managed an informal version of the equally basic Turing test. (Some users thought it was human.) Wikipedia tells the tale:

> ELIZA's creator [Joseph Weizenbaum] intended the program as a method to explore communication between humans and machines. He was surprised, and shocked, that individuals attributed human-like feelings to the computer program.
>
> Many academics believed that the program would be able to positively influence the lives of many people, particularly those with psychological issues, and that it could aid doctors working on such patients' treatment.

ELIZA asked vague questions. (I don’t think the DOCTOR version said “tell me about your mother” but it might have.) A present-day ELIZA could be extremely specific. It could interact with an individual based on gigantic amounts of personal, social, therapeutic and cultural data. It would never get tired, annoyed, insulted, upset, moody or… wait for it… insensitive. And again, it could recommend a course of human interaction to alleviate loneliness.

Some people find the idea of a 24/7 AI friend deeply disturbing, and not just because of its potential for nefarious manipulation. How could a flesh-and-blood friend compete with an AI ELIZA? Wouldn’t its all-knowing and hopefully loving presence in a person’s life make them less likely to make human friends who would be, let’s face it, more “difficult”?

I like to think the answer is no. That humans are capable of understanding the differences between AI and human friends and valuing both relationships. But what I like to think and reality are not always aligned. I’ll say this much: loneliness is killing us. Literally. It’s worth the risk of unhealthy AI friendship to stem the loneliness epidemic.

Besides, this is going to happen. The people with good intentions need to get on board now, before the people with bad intentions dominate the field. It’s not just loneliness that’s at stake. AI “friends” will determine the shape and health of individuals, our society and, eventually, our species. Yeah, it’s that important.